As we start 2026, artificial intelligence has become less of a competitive advantage and more of a business necessity. Organizations across industries are discovering that success lies not just in adopting AI, but in implementing it thoughtfully, securely, and strategically. We’ve observed firsthand how companies that approach AI with clear guardrails and robust practices consistently outperform those that rush into implementation without proper frameworks.

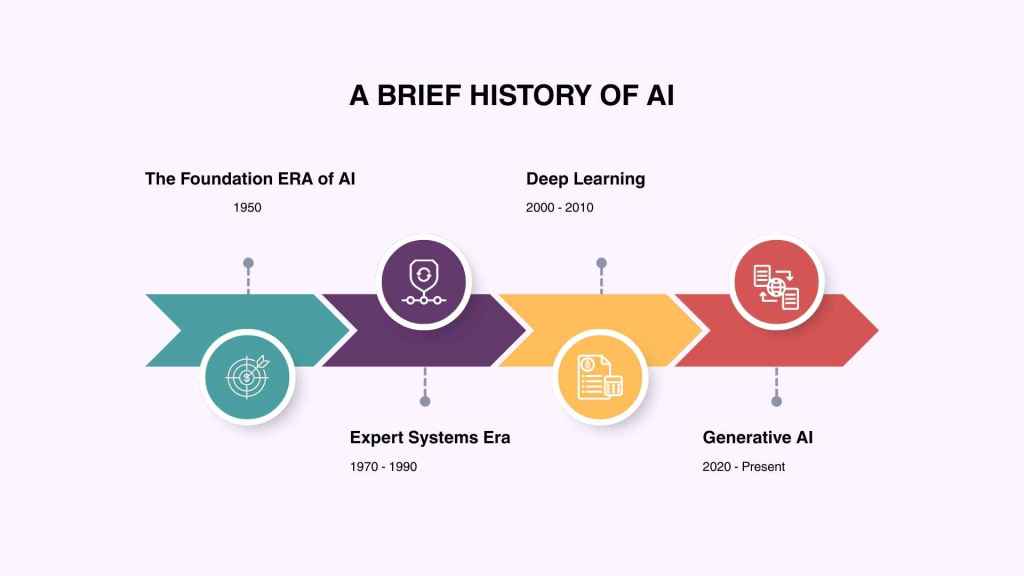

A Brief History of AI and How It’s Reshaping the Workspace

The Foundation Era of AI

The story of AI begins with a simple question: Can machines think? In 1950, Alan Turing introduced the Turing Test, a philosophical framework for evaluating machine intelligence. This theoretical foundation set the stage for what would become one of humanity’s most transformative technologies. By 1956, during the legendary Dartmouth Conference, visionaries coined the term “Artificial Intelligence,” formally launching the field.

The early years produced remarkable milestones. Between 1964 and 1966, MIT professor Joseph Weizenbaum developed ELIZA, a pattern-matching program that simulated human conversation and is widely regarded as the first chatbot. Although primitive by today’s standards, ELIZA laid the groundwork for the conversational AI systems that followed, from virtual assistants to ChatGPT.

The Expert Systems Era

The 1970s and 1980s saw AI transition from pure theory to practical application. By the early 1970s, Dendral, a Stanford project begun in 1965, had matured into an expert system capable of analyzing organic molecules, demonstrating that machines could encode domain expertise. By the 1980s, expert systems were solving real business problems, though they operated within narrow, rules-based frameworks.

The Birth of Deep Learning

The computational landscape shifted dramatically in the 2000s. Geoffrey Hinton’s work on deep learning in 2006 opened new possibilities for machine learning at scale. The breakthrough moment arrived in 2012 when AlexNet won the ImageNet competition by a wide margin, proving that deep neural networks could far outperform earlier approaches to image recognition.

The mid-to-late 2010s accelerated the pace of innovation. Ian Goodfellow and his colleagues introduced Generative Adversarial Networks (GANs) in 2014, providing a powerful new tool for creative AI applications. OpenAI’s founding in 2015 signaled a push toward AI for broad societal benefit, and its 2019 release of GPT-2 demonstrated natural language generation capabilities that astounded researchers.

The Generative AI Era

The release of GPT-3 in 2020, a model with 175 billion parameters compared with GPT-2’s 1.5 billion, marked the beginning of the generative AI explosion. Today, generative AI systems can write coherent essays, generate images, code software, and assist with complex business decisions, making AI accessible to non-technical professionals for the first time.

By 2023, generative AI had moved from research labs to everyday business applications. Today in 2026, we’re seeing AI mature from experimental tools into enterprise-grade solutions that require the same governance, security, and strategic thinking as any critical business system.

This historical arc is crucial for understanding 2026’s AI landscape: we’ve moved from theoretical concepts to practical tools integrated into everyday workflows. The question is no longer whether AI will impact work, but how organizations will manage that impact responsibly.

Understanding AI Types and Their Business Applications

Narrow AI vs General AI

Before diving into specific AI applications for business, it’s essential to understand a fundamental distinction that shapes both current capabilities and future expectations: the difference between Narrow AI and General AI.

Narrow AI

Narrow AI (Artificial Narrow Intelligence) refers to AI systems designed to perform specific, well-defined tasks. These systems excel in their niche but cannot transfer knowledge or reasoning outside that domain. Modern AI tools like virtual assistants, recommendation engines, fraud detection models, and large language models such as ChatGPT are all examples of Narrow AI in action. They deliver powerful automation, optimization, and insight, yet they remain bound by the parameters of their training and design.

Examples of Narrow AI

- Virtual Assistants: Siri, Alexa, and Google Assistant handle voice commands and simple queries

- Recommendation Algorithms: Netflix and Amazon systems predict what content or products you’ll like

- Self-Driving Vehicles: Navigate roads using specialized perception and decision-making systems

- Chatbots and Conversational AI: ChatGPT, Claude, and Gemini generate text based on prompts

- Predictive Analytics: Forecasting demand, equipment failure, or customer churn

General AI

General AI (Artificial General Intelligence, or AGI) is a theoretical form of intelligence that could, in principle, perform any intellectual task that a human can do: learning, reasoning, problem-solving, and adapting across contexts without specialized programming. Unlike Narrow AI, which focuses on specific tasks, AGI would have broad reasoning capability and flexibility. However, AGI remains a research aspiration rather than a reality today; no current system meets this definition.

Key Comparisons

| Aspect | Narrow AI | General AI |
| --- | --- | --- |
| Scope | Task-specific | Broad, human-level intelligence |
| Adaptability | Limited to pre-defined tasks | Capable of learning new tasks autonomously |
| Existence today | Ubiquitous in business and consumer products | Theoretical, not yet developed |
| Examples | Chatbots, recommendation systems, image recognition | Hypothetical future systems |

The Types of AI in the Workspace

A fundamental misconception about AI is that one type fits all needs. In reality, the AI systems businesses deploy fall into distinct categories, each with specific capabilities and limitations.

Generative AI creates new content, from written communications to code and design elements. These systems excel at drafting documents, brainstorming ideas, and automating creative tasks that previously required human input. They’re particularly valuable for marketing teams, customer service, and content creation workflows.

Predictive Analytics AI analyzes historical data to forecast trends, customer behavior, and business outcomes. Sales teams use these tools for pipeline forecasting, while operations departments leverage them for inventory management and demand planning.

Natural Language Processing powers chatbots, sentiment analysis, and document processing. This technology helps businesses understand customer feedback, automate support tickets, and extract insights from unstructured text data.

Computer Vision enables machines to interpret visual information, supporting quality control in manufacturing, document scanning in finance, and security monitoring across facilities.

Robotic Process Automation handles repetitive digital tasks by mimicking human interactions with software systems. While not always considered “true” AI, modern RPA increasingly incorporates machine learning to handle exceptions and improve over time.

The key is matching the right AI type to specific business challenges rather than implementing technology for its own sake.

Implementing AI in the Workspace

Successful AI deployment follows a structured methodology grounded in governance, clear prioritization, and continuous measurement. The following practices have emerged as critical differentiators between organizations that see meaningful ROI and those that stall after failed pilots.

Establish AI Governance Before Deployment

Organizations that implement governance frameworks before scaling AI see 28% higher staff adoption rates and successful deployment across more than three business areas. Governance should follow a federated model: a central Responsible AI function sets policy and standards, while business units own risk decisions for their specific use cases.

Key governance elements include:

- Clear ownership and accountability: Designate an AI Center of Excellence (CoE) responsible for standards, tooling, and shared services, while embedding delivery ownership in individual business functions.

- Risk-based frameworks: Pre-approve vetted model families, prompt patterns, and retrieval connectors for common low-risk use cases, allowing rapid deployment. Establish exception processes with defined timelines.

- Measurable performance metrics: Define unit economics targets (cost per interaction, accuracy thresholds, latency requirements) and monitor drift.

- Explainability requirements: High-risk automations require documented decision logic and human override capabilities.
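As a rough illustration of the "measurable performance metrics" item above, the sketch below compares one period's observed numbers against per-use-case targets and reports any breach, including accuracy drift relative to a baseline. The class names, field names, and threshold values are all hypothetical placeholders, not figures from any standard or from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Targets:
    """Hypothetical per-use-case targets; every number is a placeholder."""
    max_cost_per_interaction: float  # currency units per interaction
    min_accuracy: float              # fraction between 0 and 1
    max_latency_ms: float            # e.g. 95th-percentile latency
    max_accuracy_drop: float         # tolerated drop versus the baseline


@dataclass(frozen=True)
class Observed:
    cost_per_interaction: float
    accuracy: float
    latency_ms: float


def find_breaches(targets: Targets, observed: Observed,
                  baseline_accuracy: float) -> list[str]:
    """Return a readable list of breached targets (empty if none)."""
    breaches = []
    if observed.cost_per_interaction > targets.max_cost_per_interaction:
        breaches.append("cost per interaction above target")
    if observed.accuracy < targets.min_accuracy:
        breaches.append("accuracy below threshold")
    if observed.latency_ms > targets.max_latency_ms:
        breaches.append("latency above requirement")
    # Drift check: accuracy may still clear the floor while sliding
    # noticeably from where it stood at launch.
    if baseline_accuracy - observed.accuracy > targets.max_accuracy_drop:
        breaches.append("accuracy drift beyond tolerance")
    return breaches


targets = Targets(max_cost_per_interaction=0.05, min_accuracy=0.90,
                  max_latency_ms=800.0, max_accuracy_drop=0.03)
this_week = Observed(cost_per_interaction=0.04, accuracy=0.91,
                     latency_ms=950.0)
print(find_breaches(targets, this_week, baseline_accuracy=0.95))
```

In this made-up week, accuracy still clears its floor, yet the check flags both latency and drift, which is the kind of signal a CoE might review before approving further rollout.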

Prioritize Use Cases by Business Value, Not Technology Capability

Rather than chasing the latest AI techniques, organizations should identify 5–7 high-impact use cases aligned with business outcomes: revenue acceleration, cost-to-serve reduction, risk mitigation, or customer/employee experience improvement. Early pilots should focus on processes where outcomes are measurable and ROI is clear within 90 days.

High-probability use cases include:

- Customer-facing: Sales forecasting, personalized marketing, support automation

- Internal-facing: Report generation, data analysis, hiring support, financial forecasting

- Risk-facing: Fraud detection, compliance monitoring, supply chain forecasting

Build a 90-Day Implementation Roadmap

Effective AI transformation doesn’t happen overnight. Structure implementation into three consecutive 30-day phases:

- Day 0-30: Foundation

  - Define AI governance, approval protocols, and enterprise-grade platforms

  - Identify 2-3 high-impact pilots with accountable owners and clear KPIs

  - Communicate strategic intent to leadership, emphasizing governance and guardrails

- Day 31-60

  - Run the pilots under the governance, approval protocols, and platforms defined in the first 30 days

  - Identify friction points in workflows, data, access, and adoption

  - Present results to leadership and define scaling approach

- Day 61-90

  - Extend proven solutions across departments while maintaining governance oversight

  - Launch structured upskilling programs focused on AI literacy and decision-making

  - Establish a permanent AI oversight committee and publish service levels

Invest in AI Literacy and Skills Development

The World Economic Forum estimates that 44% of workers’ core skills will change by 2027. Organizations must build a culture of continuous learning where employees see AI as a tool for augmentation, not replacement.

Essential skills include:

- AI literacy: Understanding what AI can and cannot do, recognizing limitations and bias

- Prompt engineering: Crafting effective instructions for generative AI systems

- Data fluency: Interpreting data-driven insights and asking critical questions

- Critical thinking: Validating AI outputs, spotting errors, and exercising human judgment

The Critical Importance of AI Guardrails

AI guardrails are safeguards that keep AI systems operating safely, responsibly, and within defined organizational boundaries. They are not restrictions that slow innovation; they are enablers that allow responsible, scalable AI adoption.

Why AI Guardrails Matter

Organizations that attempt to ban AI altogether face a familiar challenge: employees quietly use consumer AI tools anyway (ChatGPT, Gemini, Copilot), often uploading sensitive company data without any oversight. Samsung’s widely reported 2023 leak, where employees pasted proprietary source code into ChatGPT, illustrates this risk.

Guardrails flip this dynamic: they enable AI use while protecting the organization.

Essential Components of AI Guardrails

AI tools don’t replace human judgment; they augment it. Employees need training not just on how to use AI tools, but on how to evaluate their outputs critically. Create a culture where people understand AI’s capabilities and limitations. Address concerns about job displacement openly and help teams see AI as a tool that frees them from routine tasks to focus on higher-value work.

- Data Loss Prevention (DLP)

  - Identify and block sensitive data (customer information, source code, financial data) before it enters AI systems

  - Classify data by sensitivity level (public, internal, confidential, restricted)

  - Prevent uploading classified information to public AI platforms

  - Enforce encryption for data in transit to AI systems

- Prompt and Output Controls

  - Monitor prompts for attempted data extraction (prompt injection attacks)

  - Filter outputs to prevent leakage of regulated or sensitive information

  - Implement confidence thresholds: flag low-confidence outputs for human review

  - Block generation of prohibited content (misinformation, illegal content, deception)

- Access and Authentication

  - Enforce strong authentication for AI system access

  - Implement least-privilege access: users can only access data and functions required for their role

  - Track who accessed the AI system, what they asked, and what it returned (auditable logs)

  - Revoke access immediately when employees leave

- Tokenization and Encryption

  - Replace sensitive data (PII, account numbers, proprietary formulas) with tokens before sending to AI

  - Encrypt data in transit and at rest

  - Use isolated, private AI models for highly sensitive work rather than cloud-based public models

  - Apply tokenization at the moment of use to ensure no sensitive data persists in system memory

- Vector Store and RAG Security

  - Retrieval-Augmented Generation (RAG) systems retrieve contextual data from databases to improve AI responses

  - These vector stores and databases must have strict access controls matching data classification

  - Encrypt and air-gap repositories containing sensitive information

  - Implement scheduled purging of cached data and embeddings to limit exposure over time

- Human-in-the-loop Oversight

  - High-risk decisions (hiring, financial lending, safety-critical choices) must include human review before execution

  - AI makes recommendations; humans make decisions

  - Maintain audit trails: decisions overridden by humans are recorded and analyzed to improve models
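To make two of these components more concrete, here is a minimal Python sketch of tokenization before a prompt leaves the organization, plus a routing rule that sends high-risk or low-confidence outputs to a human. Everything in it is an assumption for illustration: the two regular expressions are deliberately simplistic, the token format, the use-case names, and the 0.8 threshold are placeholders, and the `confidence` value is assumed to come from whatever scoring the AI system exposes, which many systems do not provide out of the box.

```python
import re

# Illustrative patterns only; real DLP tooling covers far more data types.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "ACCOUNT": re.compile(r"\b\d{10,16}\b"),
}

HIGH_RISK_USE_CASES = {"hiring", "lending", "safety"}  # hypothetical labels
CONFIDENCE_THRESHOLD = 0.8                             # placeholder value


def tokenize(text: str) -> tuple[str, dict[str, str]]:
    """Swap sensitive values for tokens; return safe text and the vault."""
    vault: dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def replace(match: re.Match) -> str:
            token = f"<{label}_{len(vault) + 1}>"
            vault[token] = match.group(0)  # original value stays local
            return token
        text = pattern.sub(replace, text)
    return text, vault


def detokenize(text: str, vault: dict[str, str]) -> str:
    """Restore original values in a response that still contains tokens."""
    for token, value in vault.items():
        text = text.replace(token, value)
    return text


def needs_human_review(use_case: str, confidence: float) -> bool:
    """High-risk use cases always go to a person; others only when unsure."""
    return use_case in HIGH_RISK_USE_CASES or confidence < CONFIDENCE_THRESHOLD


prompt = "Refund jane.doe@example.com on account 1234567890123."
safe_prompt, vault = tokenize(prompt)
print(safe_prompt)                       # text that would be sent to the AI
print(detokenize(safe_prompt, vault))    # restored inside the organization
print(needs_human_review("hiring", 0.99))
```

The vault never leaves the calling process in this sketch, so the external model sees only tokens. A production setup would more likely rely on a dedicated DLP or tokenization service than on hand-written patterns.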

Cybersecurity in the AI Era

AI introduces new cybersecurity challenges that businesses must address proactively. The same powerful capabilities that make AI valuable also make it an attractive target for attackers and a potential vulnerability if not properly secured.

Protecting AI Systems from Attack

Adversarial attacks can manipulate AI systems by feeding them carefully crafted inputs designed to produce incorrect outputs. For example, slight modifications to images can fool computer vision systems, while specially crafted prompts can cause language models to bypass safety restrictions.

Securing Training Data and Models

AI models and training data are valuable intellectual property that requires protection. Data poisoning attacks involve corrupting training data to compromise model behavior.

AI-Driven Security Threats

Attackers are using AI to create more sophisticated phishing campaigns, generate convincing deepfakes, and automate vulnerability discovery. Security awareness training needs to evolve to address these AI-enhanced threats.

Data Privacy and AI Processing

When AI systems process personal or sensitive business information, they can create new privacy risks. Data might be retained in model training, exposed through prompts, or accessed by third-party AI service providers. Implement data minimization principles, carefully review terms of service for AI platforms, and consider on-premise or private cloud deployments for processing sensitive information.

Inadequate AI Incident Response Planning

Traditional incident response plans may not adequately address AI-specific incidents like model compromise, biased outputs causing harm, or AI system manipulation. Update your organization’s incident response procedures to include AI systems, define escalation paths for AI-created issues, and ensure your security team understands the unique characteristics of AI vulnerabilities.

Measuring ROI

AI investments should be measured rigorously against business outcomes. Generic metrics like “hours of AI usage” don’t indicate value creation. Instead, track:

Operational Metrics:

- Processing cycle time reduction (e.g., “report generation reduced from 4 hours to 30 minutes”)

- Error rate reduction in automated processes

- Time freed from routine tasks per employee per week

Financial Metrics:

- Cost savings: FTE hours saved × fully-loaded labor cost

- Revenue impact: Conversion uplift, average deal size increase, customer retention improvement

- Risk reduction: Fraud prevented, compliance violations averted

Organizational Metrics:

- AI literacy scores among employees

- Adoption rate by business function

- Employee satisfaction with AI tools

- Time-to-deployment for new AI use cases
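The cycle-time and cost-savings metrics above reduce to simple arithmetic, sketched below. The cost-savings function follows the formula given under Financial Metrics; the ROI function uses the conventional (benefit minus cost) divided by cost definition, which this article does not spell out, and all input figures are invented for illustration.

```python
def cycle_time_reduction(before_hours: float, after_hours: float) -> float:
    """Fractional reduction in processing time (0.875 means 87.5% faster)."""
    return (before_hours - after_hours) / before_hours


def cost_savings(fte_hours_saved: float, loaded_hourly_cost: float) -> float:
    """FTE hours saved multiplied by fully-loaded labor cost per hour."""
    return fte_hours_saved * loaded_hourly_cost


def simple_roi(total_benefit: float, total_cost: float) -> float:
    """Conventional ROI: net benefit as a fraction of total cost."""
    return (total_benefit - total_cost) / total_cost


# Report generation reduced from 4 hours to 30 minutes.
reduction = cycle_time_reduction(before_hours=4.0, after_hours=0.5)

# Hypothetical figures: 1,200 hours saved per year at 65 per hour,
# against 50,000 in annual licence and implementation cost.
savings = cost_savings(fte_hours_saved=1200, loaded_hourly_cost=65.0)
roi = simple_roi(total_benefit=savings, total_cost=50_000.0)

print(f"cycle time reduction: {reduction:.1%}")
print(f"annual cost savings: {savings:,.0f}")
print(f"ROI: {roi:.0%}")
```

Keeping the inputs explicit like this makes it easier to challenge the assumptions behind a claimed saving, such as whether the freed hours are actually redeployed.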

Navigating AI in the workplace in 2026 requires balancing ambition with responsibility. Organizations that combine strategic prioritization, strong governance, human-centered change management, and robust security will unlock substantial competitive advantages: faster workflows, lower costs, higher-quality decisions, and more engaged employees.